Ollama Internal Server Error

Overcoming Model Size Limitations with Smaller Alternatives

Introduction:

The rapid advancement of language models has led to tools like OpenWebUI, a web interface that lets users chat with models served locally by Ollama. With larger models such as LLaMA3:70B or Gemma2:27B, however, requests can fail with the “Ollama:500, message=‘Internal Server Error’” error, which is frustrating and hinders productivity. This article explains the most likely cause of the error and a simple way to work around it.

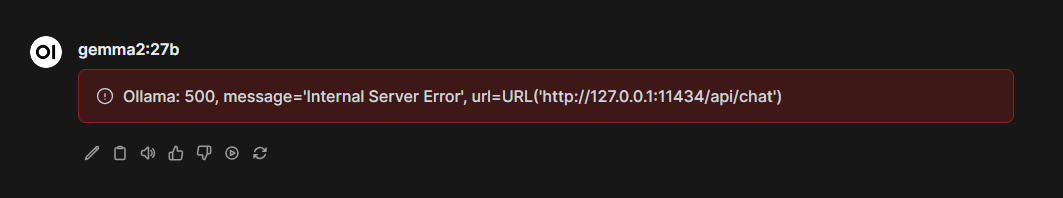

The Error: Ollama:500, message=‘Internal Server Error’

When using large language models with OpenWebUI, users may encounter the following error:

Ollama:500, message='Internal Server Error', url=URL('http://127.0.0.1:11434/api/chat')

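This error typically occurs when the model needs more memory (system RAM or GPU VRAM) than the machine has available. The “70B” and “27B” in the model names are parameter counts, not file sizes, but the downloads are still large: roughly 40GB for LLaMA3:70B and 16GB for Gemma2:27B with Ollama's default quantization. The model needs at least that much free memory to load.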
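OpenWebUI shows only the status line, so it can help to send the same request to Ollama directly and read the response body. The sketch below is one way to do that with the Python standard library. It assumes Ollama is listening on the address from the error message and that error responses are JSON objects with an `error` field; the helper names are illustrative and not part of Ollama or OpenWebUI.

```python
import json
import urllib.error
import urllib.request

# Address taken from the error message; adjust if Ollama runs elsewhere.
OLLAMA_CHAT_URL = "http://127.0.0.1:11434/api/chat"


def build_chat_request(model, prompt, url=OLLAMA_CHAT_URL):
    """Build a non-streaming POST request for Ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def describe_error(status, body):
    """Turn an error response into one readable line.

    Assumption: the body is JSON like {"error": "..."}; anything else is
    shown unchanged.
    """
    try:
        detail = json.loads(body).get("error", body)
    except (ValueError, AttributeError):
        detail = body
    return f"Ollama returned HTTP {status}: {detail}"


def chat_once(model, prompt):
    """Send one message and return the reply text or the error description."""
    request = build_chat_request(model, prompt)
    try:
        with urllib.request.urlopen(request, timeout=300) as response:
            return json.loads(response.read())["message"]["content"]
    except urllib.error.HTTPError as err:
        return describe_error(err.code, err.read().decode("utf-8", "replace"))


# Example (requires a running Ollama server):
# print(chat_once("llama3:70b", "Hello"))
```

If the body mentions memory, the model is most likely too large for the machine. If it mentions something else, the cause may be different from the one discussed in this article.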

Root Cause: Model Size and Resource Constraints

The primary reason for this error is that the model does not fit in the available memory. OpenWebUI only forwards the chat request. Ollama, the server listening on http://127.0.0.1:11434, has to load the model weights into RAM or GPU memory before it can answer. If loading fails or the process runs out of memory, Ollama responds with HTTP 500, and OpenWebUI reports it as the internal server error.
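A rough pre-check can show whether a model has any chance of fitting before it is selected in OpenWebUI. The sketch below compares model sizes with total system RAM. It makes several assumptions: the `headroom` factor of 1.2 is an illustrative rule of thumb rather than a documented Ollama value, `total_ram_bytes` relies on `os.sysconf` and so works on Linux and similar systems but not on Windows, and GPU memory is ignored. The parser expects the decoded JSON from Ollama's `/api/tags` endpoint, which lists installed models with a `size` in bytes.

```python
import os

GIB = 1024 ** 3


def total_ram_bytes():
    """Total physical memory in bytes (Unix-like systems only)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")


def installed_model_sizes(tags_response):
    """Map model name to size in bytes from a decoded /api/tags response."""
    return {m["name"]: m["size"] for m in tags_response.get("models", [])}


def likely_fits(model_bytes, memory_bytes, headroom=1.2):
    """Return True if the model plus some headroom fits in memory.

    headroom is a hypothetical safety margin for context and runtime
    overhead; tune it for your own setup.
    """
    return model_bytes * headroom <= memory_bytes


# Example with made-up numbers: a 40 GiB model on a 32 GiB machine.
# likely_fits(40 * GIB, 32 * GIB)  -> False
```

This is only a first filter. Free memory is usually lower than total memory, and a model that passes the check can still fail if other applications are using RAM.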

Solution: Using Smaller Models

One effective solution is to switch to a smaller model that fits in the available memory. The default tags of LLaMA3 (8B), Gemma (7B), and Gemma2 (9B) are each around 5GB and can be used as alternatives. They cover the same chat use cases, usually with somewhat lower answer quality, without exhausting the available resources.
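For scripts that talk to Ollama directly, the same idea can be automated: try the large model first and fall back to a smaller one when the server answers with a 5xx status. The following is a minimal sketch under that assumption. `chat_with_fallback` and `send_chat` are hypothetical helper names, and the model tags in the usage comment are only examples.

```python
import json
import urllib.error
import urllib.request

OLLAMA_CHAT_URL = "http://127.0.0.1:11434/api/chat"


def send_chat(model, prompt):
    """Send one non-streaming chat request; raises HTTPError on failure."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.loads(response.read())["message"]["content"]


def chat_with_fallback(models, prompt, send=send_chat):
    """Try each model in order and return (model, reply) for the first success.

    Only server errors (HTTP 5xx) trigger a fallback; other HTTP errors
    are re-raised because a smaller model would not fix them.
    """
    last_error = None
    for model in models:
        try:
            return model, send(model, prompt)
        except urllib.error.HTTPError as err:
            if err.code < 500:
                raise
            last_error = err
    raise RuntimeError(f"all models failed: {models}") from last_error


# Example (requires a running Ollama server with both models pulled):
# model, reply = chat_with_fallback(["llama3:70b", "llama3"], "Hello")
```

Listing the models from largest to smallest keeps the best available answer quality while still returning a result on machines with less memory.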

Why Smaller Models Work

Smaller models like LLaMA3, Gemma, or Gemma2 have fewer parameters, so their weights take up less memory and each response needs less computation. They generally do not match the output quality of their larger counterparts, but they are often good enough for everyday tasks. Because they fit in the available memory, Ollama can load them and answer requests from OpenWebUI without running into the internal server error.

Conclusion:

The “Ollama:500, message=‘Internal Server Error’” error that appears when using large language models with OpenWebUI is usually a sign that the model does not fit in the available memory. Switching to a smaller model that the machine can actually load lets users keep working with language models without running into resource constraints.
